Imagine if our computers could think more like us—learning from experience, adapting on the go, and doing all this while using just a fraction of the energy. That’s not science fiction anymore. Welcome to the world of Neuromorphic Computing 🧠—a field that’s redefining how machines process information by taking inspiration from the most powerful processor we know: the human brain.

🧬 What Exactly Is Neuromorphic Computing?

At its core, neuromorphic computing is about building systems that mimic the way biological brains work. That includes replicating neurons, synapses, and the way they fire signals—not in a rigid, step-by-step manner like traditional computers, but in a more dynamic, event-driven way.

Here are some key traits that set it apart:

- Spiking Neural Networks (SNNs) 🔁: These behave more like biological neurons, communicating through brief spikes and firing only when their accumulated input crosses a threshold.

- Asynchronous Processing ⚙️: Each part of the system computes when its inputs arrive, rather than in lockstep with a global clock.

- Event-Driven Operation ⏱️: Processing kicks in only when there's an input or a change, which can save a lot of power when activity is sparse.

- Learning by Adapting 🧪: Systems can "rewire" themselves over time to improve performance, just like our brains do when we learn.

In other words, it’s not just about making machines faster. It’s about making them smarter, more efficient, and more adaptable.
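To make the spiking and event-driven traits above a bit more concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common simplified model in spiking networks. The function name `simulate_lif` and the threshold, leak, and input values are assumptions chosen for illustration, not taken from any particular chip or library.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns the list of time steps at which the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    potential = 0.0
    spike_times = []
    for t, current in enumerate(input_current):
        # Leak a little of the stored potential, then add the new input.
        potential = leak * potential + current
        # Fire only when the accumulated potential crosses the threshold.
        if potential >= threshold:
            spike_times.append(t)
            potential = reset  # start over after a spike
    return spike_times


inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.3, 0.3, 0.3, 0.9, 0.0]
spikes = simulate_lif(inputs)
print(spikes)  # [2, 8]
```

The neuron stays silent for most of the ten steps and emits just two spikes. Downstream neurons only have work to do at those two moments, which is the intuition behind event-driven operation.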

💡 Real-World Examples That Are Already Making Waves

This isn’t just theory. Some big players have already made impressive strides in the field:

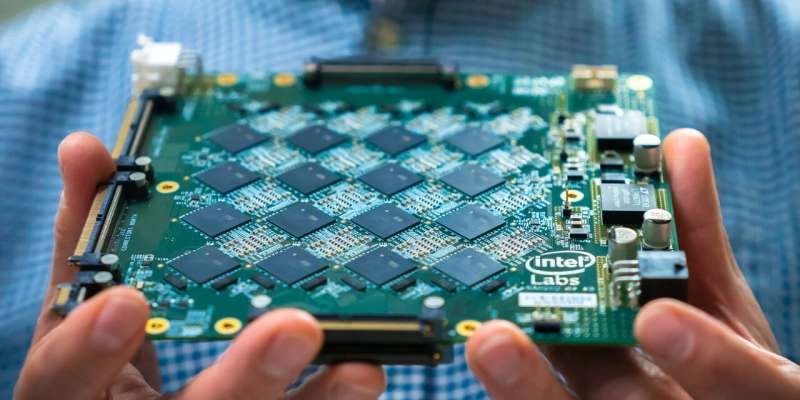

✅ Intel’s Loihi

Loihi is a research chip that learns on the fly and handles tasks like pattern recognition using significantly less power than conventional CPUs. Think of it as a brain-on-a-chip for AI at the edge.
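Learning on the fly in spiking systems is often described with spike-timing-dependent plasticity (STDP): a synapse gets stronger when the sending neuron fires just before the receiving one, and weaker when the order is reversed. The sketch below is a generic pair-based STDP rule, not Loihi's actual learning engine or API. The function names and constants (`a_plus`, `a_minus`, `tau`) are assumptions for illustration.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.05, a_minus=0.055, tau=20.0):
    """Weight change for one pre/post spike pair (times in milliseconds).

    Constants are illustrative assumptions, not values from real hardware.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: strengthen the synapse.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Post fired before pre: weaken the synapse.
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update a weight with the STDP rule and keep it within [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta(t_pre, t_post)))


w = 0.5
w_strengthened = apply_stdp(w, t_pre=10.0, t_post=15.0)  # pre then post
w_weakened = apply_stdp(w, t_pre=15.0, t_post=10.0)      # post then pre
print(w_strengthened > w, w_weakened < w)  # True True
```

Because the update depends only on the timing of two local spikes, it can run at each synapse without a central training loop, which is part of what makes this style of learning attractive for low-power hardware.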

✅ IBM’s TrueNorth

This chip has about a million neurons and 256 million synapses, yet it needs only about 70 milliwatts of power. It has been demonstrated on tasks like image and speech recognition, and even in robotics.

✅ DARPA’s SyNAPSE Program

In the defense world, DARPA's SyNAPSE program funded brain-inspired hardware research, including the IBM work that led to TrueNorth. The goal is smart drones and other autonomous systems that can make decisions in real time without needing supercomputers on board.

⚙️ Why Should Developers Care?

If you work in AI, IoT, robotics, or cybersecurity, neuromorphic computing has massive implications for you.

- 🌱 Battery life: It’s incredibly energy-efficient, perfect for wearables and edge devices.

- ⚡ Speed: Event-driven hardware reacts as soon as an input arrives, which suits real-time decision-making without the lag.

- 🔒 Security: Imagine detecting anomalies or cyber threats on the fly, without a central server.

Plus, it’s built for adaptability—something that traditional hardware often lacks.
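The battery-life point is easier to see with a rough count of how much work each approach does. The sketch below compares a toy event-driven layer, which touches a synapse only when its input spikes, with a dense layer that processes every input at every step. The layer sizes, weights, and spike pattern are made up for illustration, and operation counts are only a proxy for energy, not a measurement.

```python
def event_driven_step(weights, active_inputs, potentials):
    """Add weights to output potentials only for inputs that spiked.

    Returns the number of synaptic updates performed in this step.
    """
    ops = 0
    for i in active_inputs:
        for j, w in enumerate(weights[i]):
            potentials[j] += w
            ops += 1
    return ops


num_inputs, num_outputs = 4, 3
# Hypothetical weights: weights[i][j] connects input i to output j.
weights = [[0.1 * (i + j + 1) for j in range(num_outputs)]
           for i in range(num_inputs)]

# Which inputs spiked at each of five time steps (mostly silence).
events = [[0], [], [], [2, 3], []]

potentials = [0.0] * num_outputs
event_ops = sum(event_driven_step(weights, active, potentials)
                for active in events)

# A clocked dense layer touches every synapse at every step.
dense_ops = num_inputs * num_outputs * len(events)

print(event_ops, dense_ops)  # 9 60
```

With only three spikes across five steps, the event-driven version performs 9 synaptic updates where the dense version performs 60. The sparser the activity, the bigger that gap gets.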

🧠 The Future: Smarter, Not Just Faster

As AI models keep growing in size and complexity, we’re hitting walls with traditional processors. Neuromorphic computing offers a way forward—processing information in a more human-like, scalable, and sustainable way.

In cybersecurity, for example, neuromorphic chips could enable intelligent firewalls that evolve in real time, learning from new threats instead of just reacting to known ones.

And who knows? The next leap in general AI might not come from more GPUs, but from brains made of silicon.

“It’s not about building better machines—it’s about building machines that learn better.” – Unknown

🔍 Final Thoughts

Neuromorphic computing is still in its early days, but its potential is huge. Whether you're a developer, researcher, or tech enthusiast, now's the time to start paying attention. It’s not about replacing the brain—but learning from it.

If you're curious to dive deeper, I’d recommend exploring Intel’s Loihi project or checking out IBM’s TrueNorth chip.